Use a secure hub-and-spoke network architecture and Azure Policy to enforce the use of private endpoints that are registered in the hub’s centralized private DNS zone.

Security is a leading concern for enterprises adopting hybrid cloud strategies, and a challenging one at that. At SNP Technologies, we have hybrid security solutions to meet the stringent security requirements of our customers.

In this article, we highlight the scenario in which an organization has adopted Azure managed resources, such as Azure SQL Database and Azure App Service, in its hybrid cloud solution architecture. These so-called “platform-as-a-service” resources (or PaaS for short) are exposed to the public internet by default.

Hence, the challenge is how to rein in the PaaS resources so that their traffic flows only over the organization’s private network. The solution entails the integration of DNS zones with private endpoints and the use of governance policies to enforce the security configuration for each PaaS resource added to the network.

First, we discuss a recommended network architecture to fulfill this requirement. Then we provide examples of governance policies designed by SNP that enforce secure practices for private IP range integration and name resolution. These methods solve many hybrid cloud solution architecture concerns, like:

- Configuring a Hub & Spoke network model with an Azure private DNS zone

- Handling the redirect of DNS queries originating from on-premises to an Azure private DNS zone via a private IP

- Providing an Azure Virtual Network private IP for Azure managed (PaaS) resources (e.g., Azure SQL, App Service)

- Connecting Azure PaaS resources to Azure private DNS zones for DNS resolution

- Blocking public endpoints on Azure PaaS resources

- Deploying PaaS resources on different subscriptions within the same tenant

Networking Solution

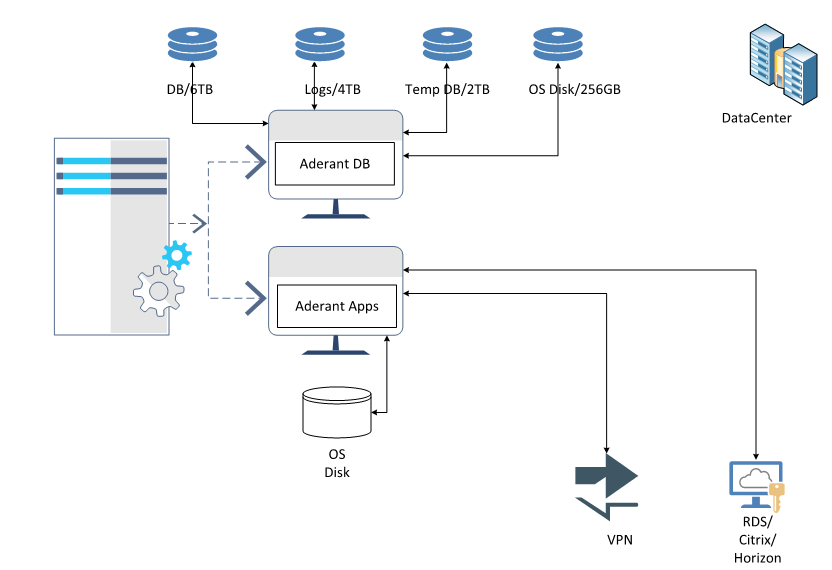

Figure 1 illustrates the architecture designed by SNP engineers to secure a hybrid cloud that includes PaaS resources. This example uses an Azure SQL database, and the architecture has the following features:

- For the on-premises network, the Active Directory DNS servers are configured with conditional forwarders for each private endpoint public DNS zone, such as *.database.windows.net* and *.windows.net*. These forwarders point to the DNS server hosted in the hub VNet in Azure.

- The DNS server hosted in the hub VNet on Azure uses the Azure-provided DNS resolver (168.63.129.16) as a forwarder.

- The virtual network used as the hub VNet is linked to the private DNS zones for Azure service names, such as privatelink.database.windows.net.

- The spoke virtual network is configured to use only the hub VNet DNS servers, so all of its DNS queries are sent to those servers.

- When the DNS servers hosted in the Azure VNet are not authoritative for the Active Directory domain names, conditional forwarders for the private link domains are set up on the on-premises DNS servers, pointing to the Azure DNS forwarders.

Figure 1
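The resolution path described above can be traced with a small simulation. This is only an illustrative sketch, not a real DNS implementation: the server name `sqlsrv01`, the private IP `10.1.2.4`, and the `DnsServer` class are hypothetical, and the Azure-provided resolver is modelled as if it held both the public CNAME and the linked private zone record directly.

```python
from typing import Dict, List, Optional, Tuple


class DnsServer:
    """Toy DNS server: answers from local records, otherwise forwards the query."""

    def __init__(
        self,
        name: str,
        records: Optional[Dict[str, Tuple[str, str]]] = None,
        conditional_forwarders: Optional[Dict[str, "DnsServer"]] = None,
        default_forwarder: Optional["DnsServer"] = None,
    ) -> None:
        self.name = name
        self.records = records or {}
        self.conditional_forwarders = conditional_forwarders or {}
        self.default_forwarder = default_forwarder

    def resolve(self, fqdn: str, trace: List[str]) -> Optional[str]:
        trace.append(self.name)
        current = fqdn
        # Follow CNAME records held locally until an A record is reached.
        while current in self.records:
            record_type, value = self.records[current]
            if record_type == "A":
                return value
            current = value
        # Otherwise use the first conditional forwarder whose suffix matches.
        for suffix, target in self.conditional_forwarders.items():
            if current.endswith(suffix):
                return target.resolve(current, trace)
        if self.default_forwarder is not None:
            return self.default_forwarder.resolve(current, trace)
        return None


# Stand-in for the Azure-provided resolver (168.63.129.16) as seen from the hub
# VNet, which is linked to the privatelink.database.windows.net private zone.
azure_resolver = DnsServer(
    "azure-resolver",
    records={
        # Hypothetical server name and private IP, for illustration only.
        "sqlsrv01.database.windows.net": ("CNAME", "sqlsrv01.privatelink.database.windows.net"),
        "sqlsrv01.privatelink.database.windows.net": ("A", "10.1.2.4"),
    },
)

# DNS server in the hub VNet: forwards everything to the Azure resolver.
hub_dns = DnsServer("hub-dns", default_forwarder=azure_resolver)

# On-premises DNS: conditional forwarder for the public zone, pointing to the hub.
onprem_dns = DnsServer("onprem-dns", conditional_forwarders={"database.windows.net": hub_dns})

hops: List[str] = []
private_ip = onprem_dns.resolve("sqlsrv01.database.windows.net", hops)
print(private_ip, hops)
```

The point of the sketch is that the on-premises client keeps using the public name of the SQL server, while the forwarding chain ensures the answer is the private endpoint’s IP address.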

Governance Solution

To ensure private networking for PaaS resources, the following conditions should be met:

- The PaaS resource has a private endpoint, not a public endpoint

- A DNS record for the PaaS resource is entered in the central, private DNS zone for the entire network

Below we describe three policies that work together to ensure these conditions are met.

Please note that these are custom policies, not built-in Azure policies (e.g. Azure Policy samples). The list of resources at the end of this article includes a link to a tutorial on how to create a custom policy definition in Azure.

Policy 1: Disable public endpoint for PaaS services

Why: By default, the endpoints of PaaS resources are accessible over the public internet.

How: This policy prevents users from creating Azure PaaS services with public endpoints and raises an error if a private endpoint is not configured at resource creation.

Note: In Azure, the service that enables private endpoints is Azure Private Link. Please refer to the Resources section at the end of this article for links to related Azure documentation.
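The article does not reproduce SNP’s policy definition, so the following is only a minimal sketch of what a rule of this kind could look like for Azure SQL, written as a Python dictionary that can be serialized to JSON. It assumes the policy keys on the `Microsoft.Sql/servers/publicNetworkAccess` alias with a `deny` effect; the actual custom policy may use different conditions. The `matches` helper is a hypothetical, simplified stand-in for the policy engine, used here only to show which resources the rule would catch.

```python
import json

# Hypothetical sketch of a custom policy definition body; not SNP's actual policy.
DENY_SQL_PUBLIC_ACCESS = {
    "mode": "Indexed",
    "policyRule": {
        "if": {
            "allOf": [
                {"field": "type", "equals": "Microsoft.Sql/servers"},
                {
                    "field": "Microsoft.Sql/servers/publicNetworkAccess",
                    "notEquals": "Disabled",
                },
            ]
        },
        "then": {"effect": "deny"},
    },
}


def field_value(resource: dict, field: str):
    """Look up a field; aliases are mapped to properties by their last segment."""
    if field in ("type", "name"):
        return resource.get(field)
    return resource.get("properties", {}).get(field.rsplit("/", 1)[-1])


def matches(condition: dict, resource: dict) -> bool:
    """Simplified evaluator for allOf / equals / notEquals only.

    The real engine compares strings case-insensitively and supports many more
    operators; this helper ignores both for brevity.
    """
    if "allOf" in condition:
        return all(matches(c, resource) for c in condition["allOf"])
    value = field_value(resource, condition["field"])
    if "equals" in condition:
        return value == condition["equals"]
    if "notEquals" in condition:
        return value != condition["notEquals"]
    raise ValueError(f"unsupported condition: {condition}")


rule_if = DENY_SQL_PUBLIC_ACCESS["policyRule"]["if"]
public_server = {
    "type": "Microsoft.Sql/servers",
    "properties": {"publicNetworkAccess": "Enabled"},
}
private_server = {
    "type": "Microsoft.Sql/servers",
    "properties": {"publicNetworkAccess": "Disabled"},
}

print(json.dumps(DENY_SQL_PUBLIC_ACCESS, indent=2))
print("public server denied:", matches(rule_if, public_server))
print("private server denied:", matches(rule_if, private_server))
```

A similar rule would be needed for each PaaS resource type in scope (for example App Service), since the relevant property alias differs per resource provider.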

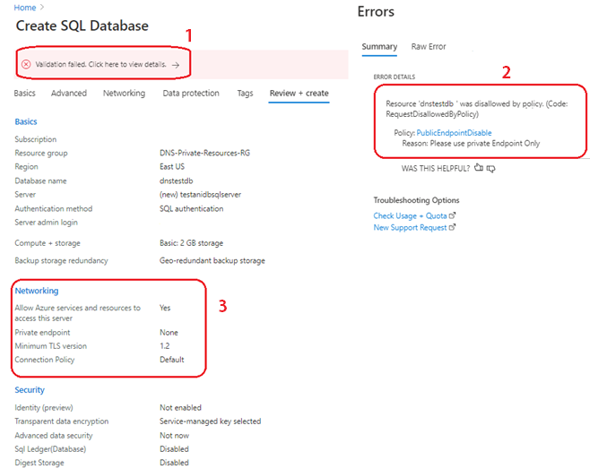

Figure 2 depicts the Azure Portal screen when the policy criteria are not met:

1. Validation fails because of the governance policy

2. Error Details indicate the Azure Policy that disallows the Public Endpoint creation

3. In the Networking section we see that the “Private endpoint” setting is set to “None”

4. Once the Private endpoint is added, the policy validation passes (Figure 3)

Figure 2

Figure 3

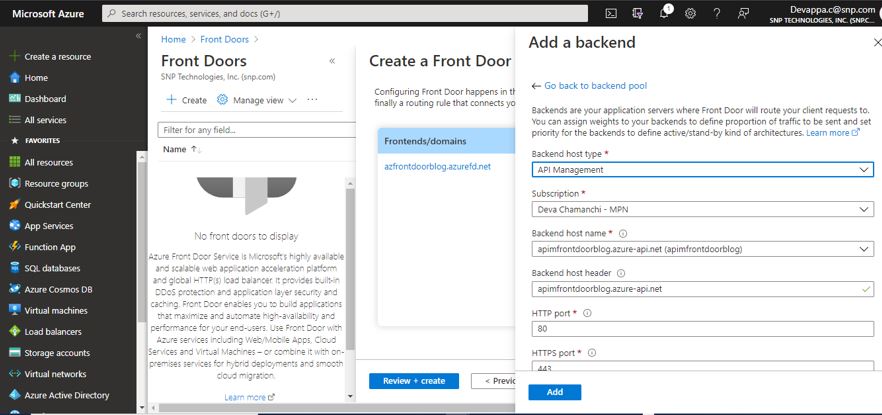

Policy 2: Deny creation of a private DNS zone with a Private Link prefix

Why: By default, when you create a private endpoint, a private DNS zone is created in each spoke subscription.

Because our architecture uses centralized DNS with conditional forwarders and central private DNS zones, we need to prevent users from creating their own Private Link private DNS zones for each new resource added to the network. Left ungoverned, private DNS zones would sprawl across subscriptions.

How: This policy prevents creation of a private DNS zone with a Private Link prefix in the spoke subscriptions. With Policy 3 that follows, we associate the newly created resource with a central, private DNS zone already in the hub.
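As a rough illustration of this idea, the sketch below expresses a deny rule for private DNS zones whose name starts with `privatelink`, again as a Python dictionary. It assumes the rule matches on the zone name with the `like` operator and is assigned only to the spoke subscriptions, so the central zones in the hub are unaffected; SNP’s actual definition is not shown in the article and may differ. The `is_denied` helper is hypothetical and mirrors only these two conditions.

```python
# Hypothetical sketch of a custom policy definition body; not SNP's actual policy.
DENY_PRIVATELINK_ZONES = {
    "mode": "Indexed",
    "policyRule": {
        "if": {
            "allOf": [
                {"field": "type", "equals": "Microsoft.Network/privateDnsZones"},
                {"field": "name", "like": "privatelink*"},
            ]
        },
        "then": {"effect": "deny"},
    },
}


def is_denied(resource: dict) -> bool:
    """Simplified local check of the two conditions in the rule above."""
    type_cond, name_cond = DENY_PRIVATELINK_ZONES["policyRule"]["if"]["allOf"]
    # The pattern has a single trailing wildcard, so a prefix test is equivalent.
    prefix = name_cond["like"].rstrip("*").lower()
    same_type = resource.get("type", "").lower() == type_cond["equals"].lower()
    return same_type and resource.get("name", "").lower().startswith(prefix)


spoke_zone = {
    "type": "Microsoft.Network/privateDnsZones",
    "name": "privatelink.database.windows.net",
}
internal_zone = {
    "type": "Microsoft.Network/privateDnsZones",
    "name": "corp.example.internal",  # hypothetical non-Private Link zone
}

print("privatelink zone denied:", is_denied(spoke_zone))
print("other private zone denied:", is_denied(internal_zone))
```

Matching on the prefix rather than denying all private DNS zones leaves spoke teams free to create zones that are unrelated to Private Link.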

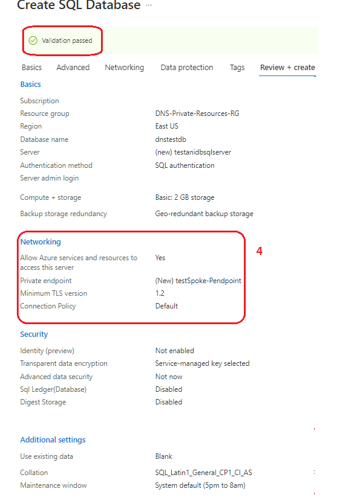

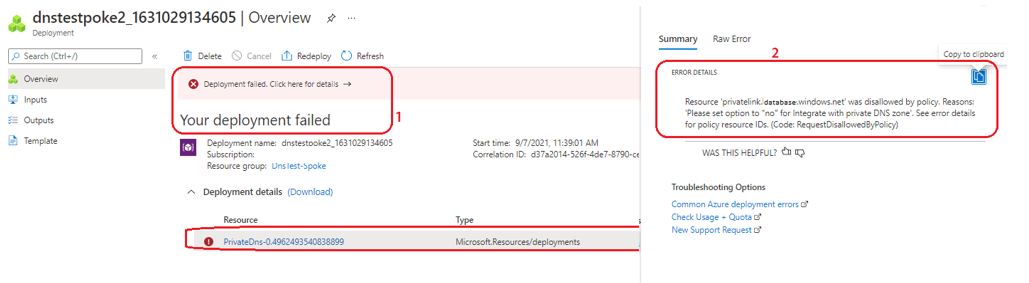

Figure 4 shows the Azure Portal screen when the policy criteria are not met and a user tries to deploy a private DNS zone for Private Link:

1. Deployment fails due to policy

2. Error Details show the Azure Policy that denied creation of the resource, and the reason

Figure 4

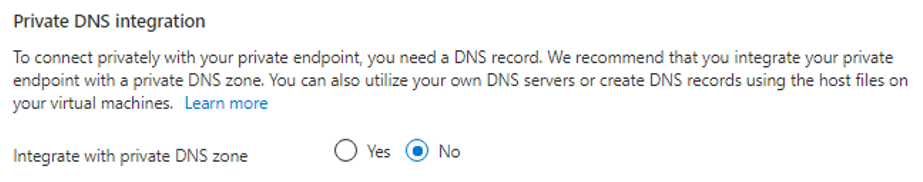

To avoid the deployment error, users must set the “Integrate with private DNS zone” option to “No” during resource creation (Figure 5).

Figure 5

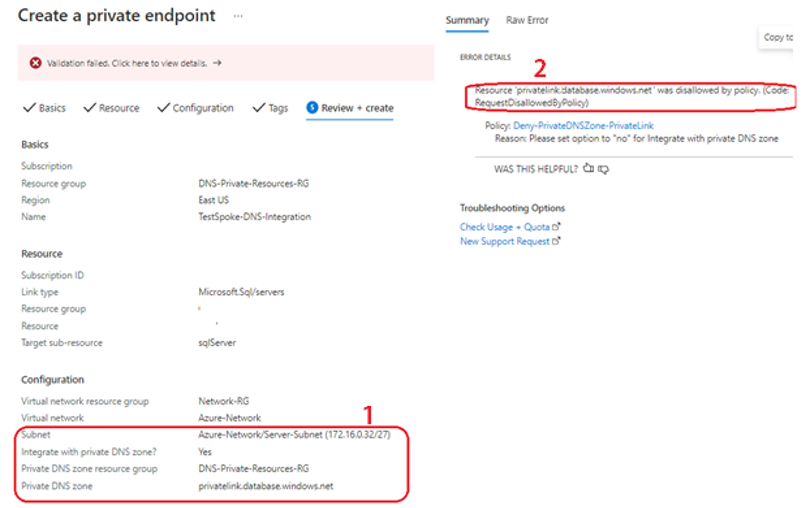

If the user tries to create a private endpoint with private DNS zone integration enabled, the policy denies creation of the resource during validation. Figure 6 depicts the Azure Portal resource creation screen when the “Integrate with private DNS zone” setting is set to “Yes”:

1. “Integrate with private DNS zone” is set to “Yes”.

2. Error details reference the policy that denied creation of the resource, and the reason.

Figure 6

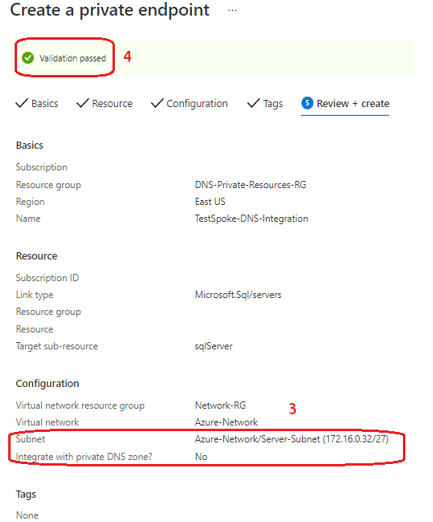

Figure 7 depicts the Azure Portal screen when the “Integrate with private DNS zone” setting is set to “No”:

3. The setting is observed in the Networking configuration

4. Policy validation passes

Figure 7

Policy 3: “Deploy If Not Exists” policy to automate DNS entries

Why: As described above, because the “Integrate with private DNS zone” setting is set to “No”, no DNS zone is created for the private endpoint. We therefore need a way to register the private endpoint in the centralized private DNS zone of the hub. Out of the box, Azure does not provide this option during resource creation.

How: We use a remediation policy to automate the DNS entry. Within Azure, resources that are non-compliant with a deployIfNotExists policy can be brought into a compliant state through remediation.
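To make the mechanism concrete, here is a hedged sketch of a deployIfNotExists definition that attaches a private endpoint to a central private DNS zone by deploying a `privateDnsZoneGroups` child resource. It follows the general pattern Microsoft documents for Private Link DNS integration at scale and is not SNP’s actual policy. The function name, the `groupId` value `sqlServer`, the zone config name, the API version, and the role definition ID (intended to be the built-in Network Contributor role) are assumptions to verify before use.

```python
import json

# Alias that exposes the group IDs (sub-resources) a private endpoint targets.
GROUP_IDS_ALIAS = (
    "Microsoft.Network/privateEndpoints/privateLinkServiceConnections[*].groupIds[*]"
)


def build_dns_zone_group_policy(group_id: str) -> dict:
    """Build a hypothetical deployIfNotExists policy body for one group ID."""
    template = {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "privateDnsZoneId": {"type": "string"},
            "privateEndpointName": {"type": "string"},
            "location": {"type": "string"},
        },
        "resources": [
            {
                "type": "Microsoft.Network/privateEndpoints/privateDnsZoneGroups",
                "apiVersion": "2020-03-01",  # assumption: verify the current version
                "name": "[concat(parameters('privateEndpointName'), '/deployedByPolicy')]",
                "location": "[parameters('location')]",
                "properties": {
                    "privateDnsZoneConfigs": [
                        {
                            "name": f"{group_id}-privateDnsZone",
                            "properties": {
                                "privateDnsZoneId": "[parameters('privateDnsZoneId')]"
                            },
                        }
                    ]
                },
            }
        ],
    }
    return {
        "mode": "Indexed",
        # Policy parameter: resource ID of the central private DNS zone in the hub.
        "parameters": {"privateDnsZoneId": {"type": "String"}},
        "policyRule": {
            "if": {
                "allOf": [
                    {"field": "type", "equals": "Microsoft.Network/privateEndpoints"},
                    {
                        "count": {
                            "field": GROUP_IDS_ALIAS,
                            "where": {"field": GROUP_IDS_ALIAS, "equals": group_id},
                        },
                        "greaterOrEquals": 1,
                    },
                ]
            },
            "then": {
                "effect": "deployIfNotExists",
                "details": {
                    "type": "Microsoft.Network/privateEndpoints/privateDnsZoneGroups",
                    # Assumption: ID of the built-in Network Contributor role.
                    "roleDefinitionIds": [
                        "/providers/Microsoft.Authorization/roleDefinitions/4d97b98b-1d4f-4787-a291-c67834d212e7"
                    ],
                    "deployment": {
                        "properties": {
                            "mode": "incremental",
                            "template": template,
                            "parameters": {
                                "privateDnsZoneId": {"value": "[parameters('privateDnsZoneId')]"},
                                "privateEndpointName": {"value": "[field('name')]"},
                                "location": {"value": "[field('location')]"},
                            },
                        }
                    },
                },
            },
        },
    }


sql_policy = build_dns_zone_group_policy("sqlServer")
print(json.dumps(sql_policy, indent=2))
```

In this pattern, one policy assignment is typically created per private link zone, with the `privateDnsZoneId` parameter set to the matching zone in the hub. Private endpoints that already exist are brought into compliance by running a remediation task against the assignment.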

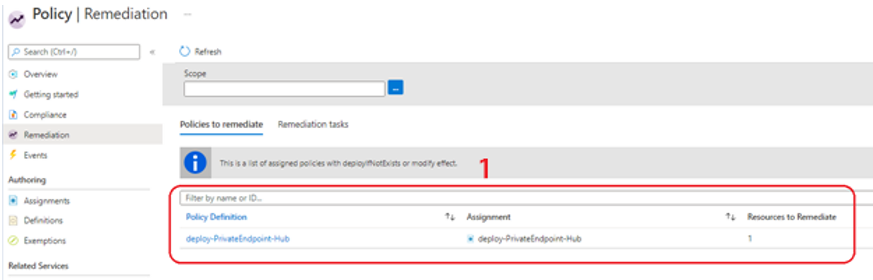

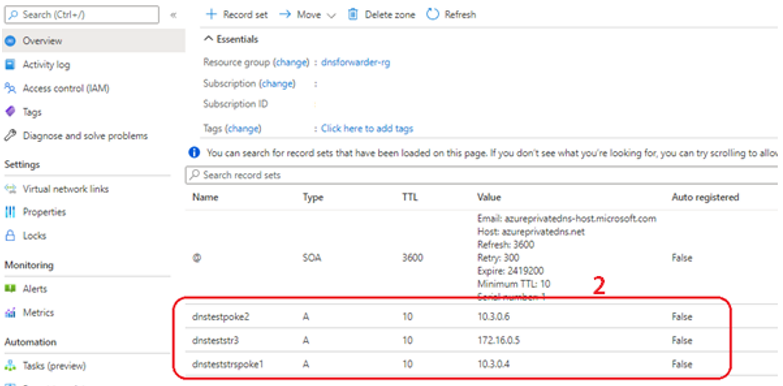

The Azure portal screen captures below depict the policy remediation plan:

1. In Figure 8 we see the policy to remediate. The remediation task automatically adds the Azure resource’s DNS record to the central private DNS zone.

2. Figure 9 shows that the remediation task successfully added the DNS entries for the respective Private Link DNS records to the central private DNS zone.

Figure 8

Figure 9

Conclusion

In this article, we have shown how to securely deploy Azure PaaS resources with private endpoints. While thoughtful hybrid network planning is a given, Azure governance is an ingredient for success that is often overlooked. We hope you explore the resources provided below to learn more about Azure Private Link, how DNS is managed in Azure, and how Azure Policy can automate the governance of resource creation once the network and security foundation is in place. For more information, contact SNP Technologies.

Resources

- What is Azure Private Link?

- What is Azure DNS?

- What is Azure Private DNS?

- What is an Azure DNS private zone?

- Overview of Azure Policy

- Tutorial: Create a custom policy definition

- Remediate non-compliant resources