Data estates are becoming increasingly heterogeneous as data grows exponentially and spreads across data centers, edge devices, and multiple public clouds. Beyond the complexity of managing data across different environments, the lack of a unified view of assets, security, and governance presents a further challenge.

Moving your SQL infrastructure to the cloud has many benefits: reducing costs, driving productivity, and accelerating insights and decision-making can make a measurable impact on an organization’s competitiveness, particularly in uncertain times. In addition, the underlying infrastructure, servers, and networking are maintained by the cloud provider by default.

With SQL Server 2008 and 2012 reaching their end of life, it is advisable to upgrade them or migrate them to Azure cloud services. Modernizing any version of SQL Server on Azure brings many added benefits, including:

- Azure PaaS provides 99.99% availability

- Azure IaaS provides 99.95% availability

- Extended security updates for SQL Server 2008 and 2012

- Backing up SQL Server running in Azure VMs is made easy with Azure Backup, a stream-based, specialized solution. The solution aligns with Azure Backup’s long-term retention, zero infrastructure backup, and central management features.

Tools leveraged

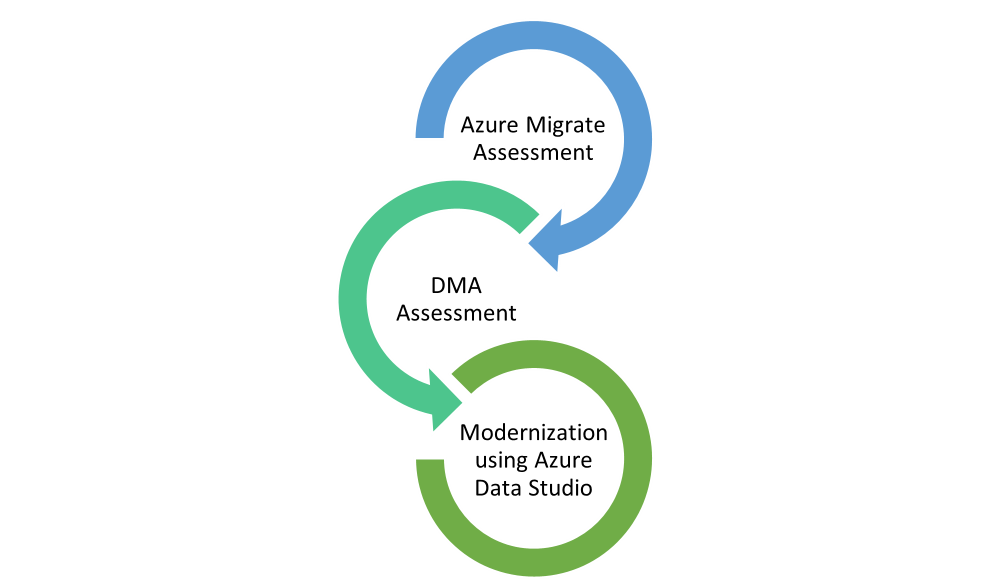

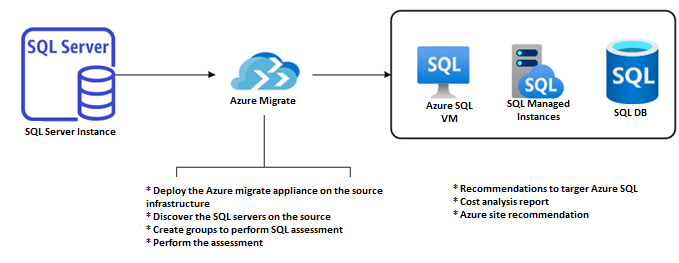

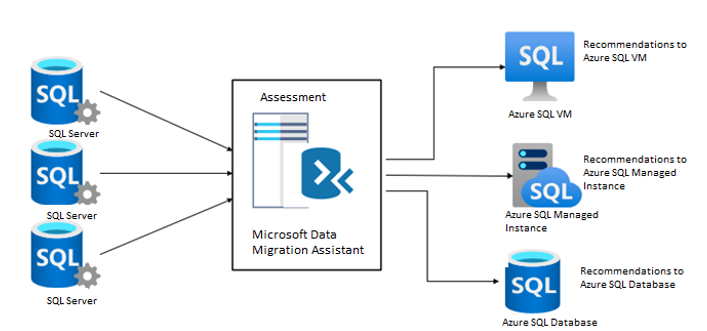

For modernizing the SQL infrastructure, SNP leveraged a variety of tools from Microsoft, such as the following.

- The Azure Database Migration Service has been used since the beginning to modernize on-premises SQL servers. Using this tool, you can migrate your data, schema, and objects from multiple sources to Azure at scale, while simplifying, guiding, and automating the process.

- Azure Data Studio, together with the Azure SQL Migration extension, is one of the newest tools for modernizing SQL infrastructure. It is designed for data professionals who run SQL Server and Azure databases on-premises and in multicloud environments.

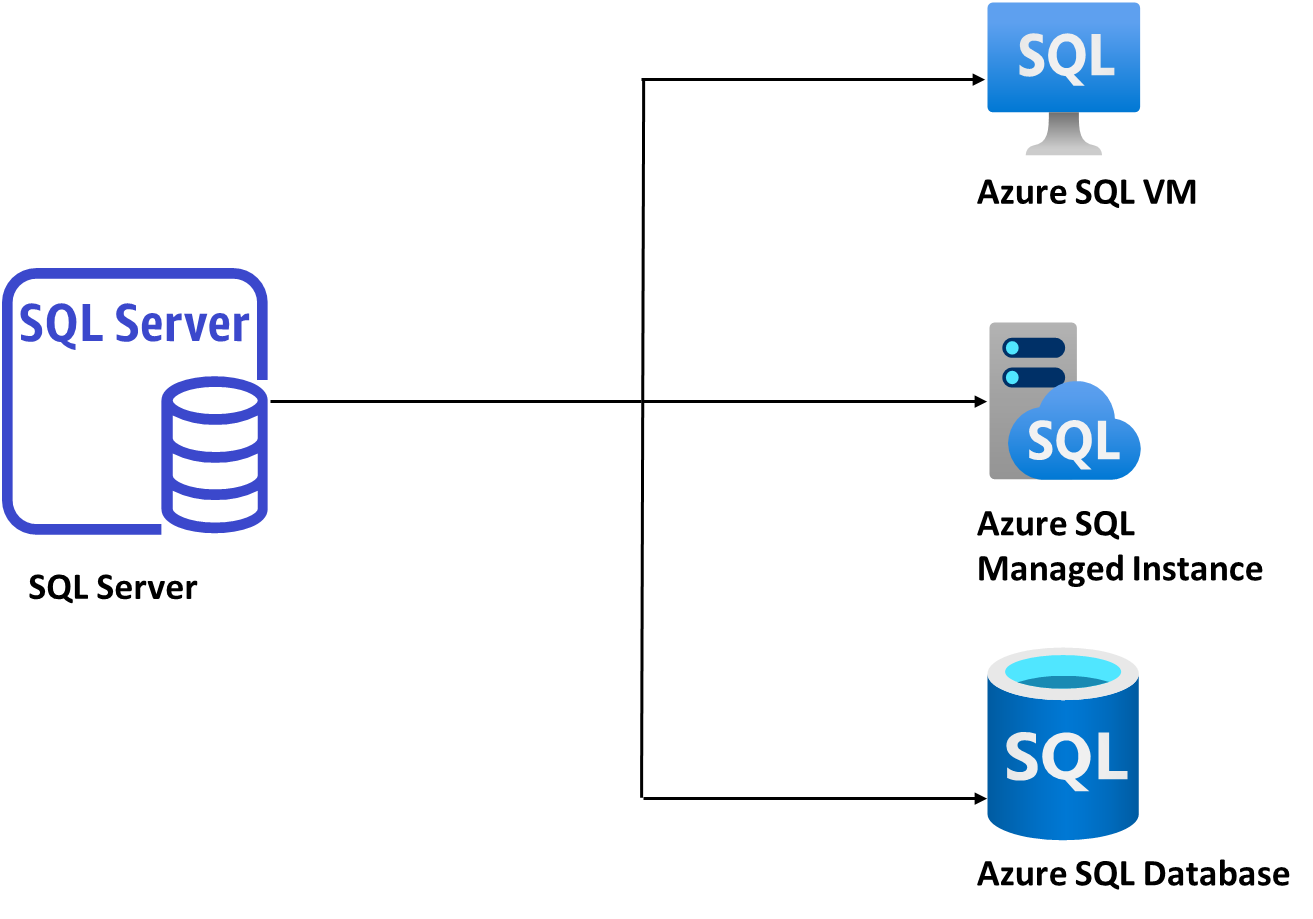

Potential reference architecture diagram

Let’s take a closer look at the architecture, what components are involved and what is being done in Azure Data Studio to migrate or modernize the on-premises SQL infrastructure.

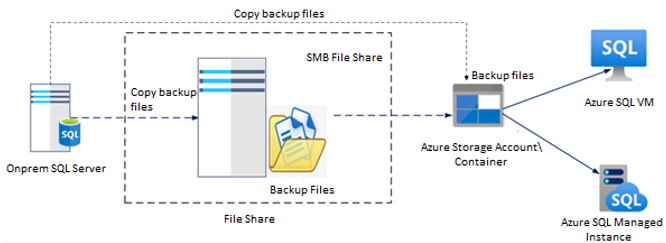

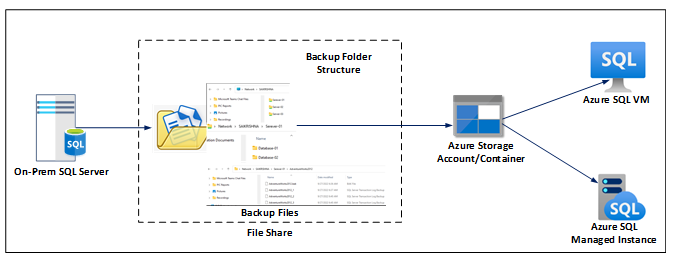

The following components are involved in an Azure Data Studio migration or modernization:

- Source SQL Server: the on-premises SQL Server that is to be modernized or migrated.

- Destination server: the Azure SQL VM to which the on-premises SQL Server will be moved.

- Staging layer: the storage account or network share folder that holds the backup files. Backup files are a major component of modernization.

Azure Data Studio and the Azure SQL Migration extension rely primarily on backup files: a full backup of the database plus the subsequent transaction log backups. The staging layer, where the backup files are stored, is therefore another important component.
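As a rough illustration of the two kinds of backup files involved, the sketch below builds standard T-SQL `BACKUP DATABASE` and `BACKUP LOG` statements for one database. The function name, the file naming scheme, the example paths, and the use of `WITH CHECKSUM` are assumptions made for this example; they are not taken from the migration tooling itself.

```python
from datetime import datetime


def backup_statements(database: str, backup_dir: str, stamp: datetime) -> dict:
    """Build T-SQL for one full backup and one transaction log backup.

    The naming scheme (<db>_full_<timestamp>.bak / <db>_log_<timestamp>.trn)
    is an assumption for this sketch, not a requirement of the tooling.
    """
    tag = stamp.strftime("%Y%m%d_%H%M%S")
    full = (
        f"BACKUP DATABASE [{database}] "
        f"TO DISK = N'{backup_dir}\\{database}_full_{tag}.bak' WITH CHECKSUM;"
    )
    log = (
        f"BACKUP LOG [{database}] "
        f"TO DISK = N'{backup_dir}\\{database}_log_{tag}.trn' WITH CHECKSUM;"
    )
    return {"full": full, "log": log}


if __name__ == "__main__":
    # Hypothetical database name and network share path.
    stmts = backup_statements(
        "SalesDb", r"\\fileserver\sqlbackups\SalesDb", datetime(2024, 1, 15, 2, 0, 0)
    )
    print(stmts["full"])
    print(stmts["log"])
```

In practice the full backup is taken once and log backups are repeated on a schedule until cutover, so the log statement would be generated with a fresh timestamp each time.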

Azure Data Studio can read backup files from a network share folder, an Azure storage container, or an Azure file share. In each case, the backup files must be placed in a specific structure. As shown in the architecture below, the backup files for each database must be placed in their own folder or container.

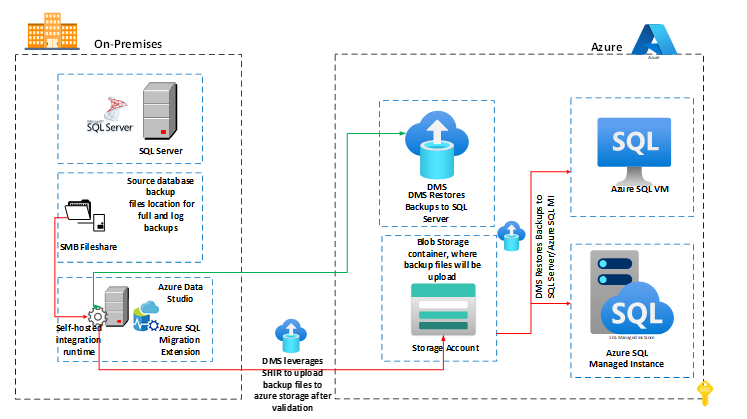

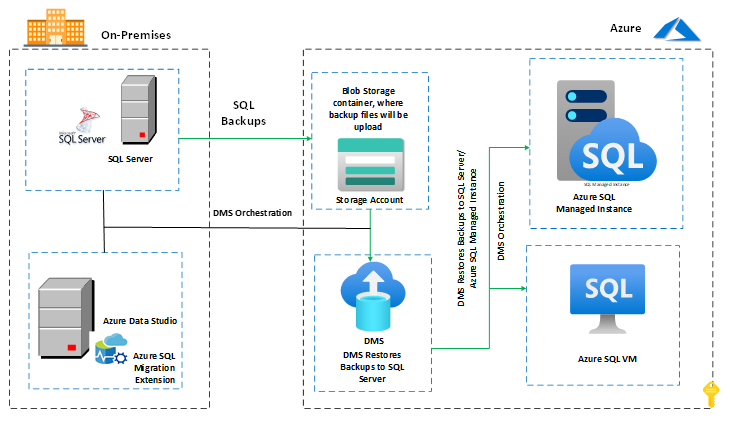
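The per-database layout described above can be sketched in a few lines of Python. This is a minimal sketch: the function name, the input format (a mapping of file name to database name), and the example file names are hypothetical and only illustrate the idea of one folder or container per database.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def plan_staging_layout(backup_files: dict) -> dict:
    """Group backup files into one folder (or container) per database.

    backup_files maps a backup file name to the database it belongs to.
    Returns a mapping of database name to the staged paths of its files.
    """
    layout = defaultdict(list)
    # Sort by file name so the result is deterministic.
    for file_name, database in sorted(backup_files.items()):
        layout[database].append(str(PurePosixPath(database) / file_name))
    return dict(layout)


if __name__ == "__main__":
    # Hypothetical backup files for two databases.
    files = {
        "sales_full.bak": "SalesDb",
        "sales_log_01.trn": "SalesDb",
        "hr_full.bak": "HrDb",
    }
    for database, paths in plan_staging_layout(files).items():
        print(database, paths)
```

Keeping each database's full and log backups together in this way means a backup chain can be located from the database name alone.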

To migrate to Azure, Azure Data Studio with the Azure SQL Migration extension relies on Azure Database Migration Service (DMS), the core technology behind the scenes. DMS is also integrated with Azure Data Factory, which runs a pipeline at regular intervals to copy the backup files from the on-premises network share folder to Azure and restore them on the target. If the backup files are already in storage containers, they are restored directly.

When the backup files are in a network share folder, Azure Data Studio uses a self-hosted integration runtime to establish a connection between on-premises and Azure. After the connection has been established, Azure Data Studio begins the modernization process, leveraging Azure DMS.

Initially, the full backup and all subsequent transaction log backup files for each database are placed in that database’s folder or container. What happens next depends on where the files are staged:

- If the backup files are in a network share folder, Azure Data Studio copies them to an Azure storage container and then restores them to the target Azure SQL VM or Azure SQL Managed Instance.

- If the backup files are already in a storage account, Azure Data Studio restores them directly from the storage account to the Azure target.

Once the last log backup has been restored on the target, we need to cut over the database and bring it online on the target. The databases remain in the Restoring state while the backup files are being restored, which means that we will not be able to access them until the cutover has been completed.
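The restore-then-cutover sequence can be pictured with plain T-SQL. The sketch below is a conceptual illustration only: the migration service performs the equivalent work on your behalf, and the exact commands it issues are not shown in this article. The function name and the file names are hypothetical, and the caller is assumed to pass the log backups in the order they were taken.

```python
def restore_sequence(database: str, full_backup: str, log_backups: list) -> list:
    """Return T-SQL restore statements in the order they would be applied."""
    # The full backup is restored first and the database is left unrecovered,
    # so that further log backups can still be applied.
    steps = [
        f"RESTORE DATABASE [{database}] FROM DISK = N'{full_backup}' WITH NORECOVERY;"
    ]
    # Each log backup is applied in sequence, still without recovery.
    for log in log_backups:
        steps.append(
            f"RESTORE LOG [{database}] FROM DISK = N'{log}' WITH NORECOVERY;"
        )
    # Cutover: recovery ends the restore chain and brings the database online.
    steps.append(f"RESTORE DATABASE [{database}] WITH RECOVERY;")
    return steps


if __name__ == "__main__":
    for statement in restore_sequence(
        "SalesDb", "sales_full.bak", ["sales_log_01.trn", "sales_log_02.trn"]
    ):
        print(statement)
```

Every step before the last uses `NORECOVERY`, which is why the database is inaccessible until cutover; only the final `WITH RECOVERY` step makes it available for use.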

Your next steps

If you like what you have read so far, let’s move forward together with confidence. We are here to help at every step. Contact SNP’s migration experts.